Video Building Blocks: Video Compression – Part 2

Published November 13, 2020

Welcome to part two of video compression!

Last week we learned that video compression uses algorithms to reduce the size of a video while losing as little quality as possible. We also covered the interframe method of compressing a video. This week, we’re going to look at some of the specifications for encoding a video and another compression method!

What is a video codec?

Video compression algorithms are implemented by codecs. “Codec” is a portmanteau of “coder” and “decoder” (some expand it as “compressor” and “decompressor”). A video codec writes (encodes) and reads (decodes) video information. There are standard specifications for video codecs, and there are specific implementations of those standards. Imagine it like this: the codec specification is a general burger. The implementations are the Big Mac and the Whopper, specific recipes for the general burger.

You probably haven’t seen these codecs unless you’re in the professional video field, but these are some of the most popular:

- H.264 (AVC) – The standard for the internet! Delivers great quality for 1080p video at low bandwidth and is widely supported

- H.265 (HEVC) – The up-and-coming standard for the internet. Compresses more efficiently than H.264, which makes 4K streaming practical, but isn’t widely compatible yet

- VP9 – An open, royalty-free codec from Google, contending to be the internet standard

- Apple ProRes – A proprietary codec from Apple for use in video post-production
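To make the burger analogy concrete, here is a minimal Python sketch. It is not a real video codec: it uses run-length encoding on a single row of pixel values as a stand-in, and the names `RunLengthCodec`, `LoopCodec`, and `GroupbyCodec` are made up for illustration. The point is only that two different implementations can follow the same specification and read each other’s output.

```python
from itertools import groupby
from typing import Protocol


class RunLengthCodec(Protocol):
    """The 'specification': what every implementation must be able to do."""

    def encode(self, pixels): ...

    def decode(self, runs): ...


class LoopCodec:
    """One implementation of the spec, written with plain loops."""

    def encode(self, pixels):
        runs = []
        for value in pixels:
            if runs and runs[-1][0] == value:
                # Same value as the previous pixel: extend the current run.
                runs[-1] = (value, runs[-1][1] + 1)
            else:
                runs.append((value, 1))
        return runs

    def decode(self, runs):
        pixels = []
        for value, count in runs:
            pixels.extend([value] * count)
        return pixels


class GroupbyCodec:
    """A second implementation of the same spec, built on itertools.groupby."""

    def encode(self, pixels):
        return [(value, len(list(group))) for value, group in groupby(pixels)]

    def decode(self, runs):
        return [value for value, count in runs for _ in range(count)]


row = [255, 255, 255, 0, 0, 7]
encoded = LoopCodec().encode(row)        # written by one implementation
restored = GroupbyCodec().decode(encoded)  # read back by the other
```

Because both classes agree on the format (a list of value and count pairs), a row encoded by one can be decoded by the other, much like a video encoded by one H.264 encoder can be played by a different H.264 decoder.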

What is a container?

The container, which you’re probably more familiar with as a file extension, houses all the audio, video, and metadata information so a player can understand it. Some containers are broadly compatible while others can only be played on a specific piece of hardware or software.

For example, if you wanted to open a .MOV file on a Windows computer, you would need a player that understands Apple’s QuickTime format to view the video. Below are some of the common video containers:

- MPEG: .mp4

- Microsoft: .avi, .wmv

- Apple: .mov, .qt

- Google: .webm
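The list above can be written as a small lookup table. This is only a sketch: the `container_family` helper is a made-up name, and a file extension is a hint about the container, not proof. It also says nothing about which codec is inside.

```python
from pathlib import Path

# Extension-to-family table, taken directly from the list above.
CONTAINER_FAMILIES = {
    ".mp4": "MPEG",
    ".avi": "Microsoft",
    ".wmv": "Microsoft",
    ".mov": "Apple",
    ".qt": "Apple",
    ".webm": "Google",
}


def container_family(filename):
    """Guess the container family from the file extension (case-insensitive)."""
    suffix = Path(filename).suffix.lower()
    return CONTAINER_FAMILIES.get(suffix, "unknown")


print(container_family("Keynote.MOV"))
print(container_family("lobby-loop.webm"))
```

A production tool would inspect the file’s contents rather than trust its name, since anyone can rename a file.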

Why does this matter?

If you’re not in the professional video industry, it’s still good to have a basic understanding of how the days’ worth of video uploaded every minute gets streamed online. If you’re uploading content to Facebook or YouTube, knowing which formats give the highest quality and the smallest file size while staying compatible can help you be more efficient with your productions.

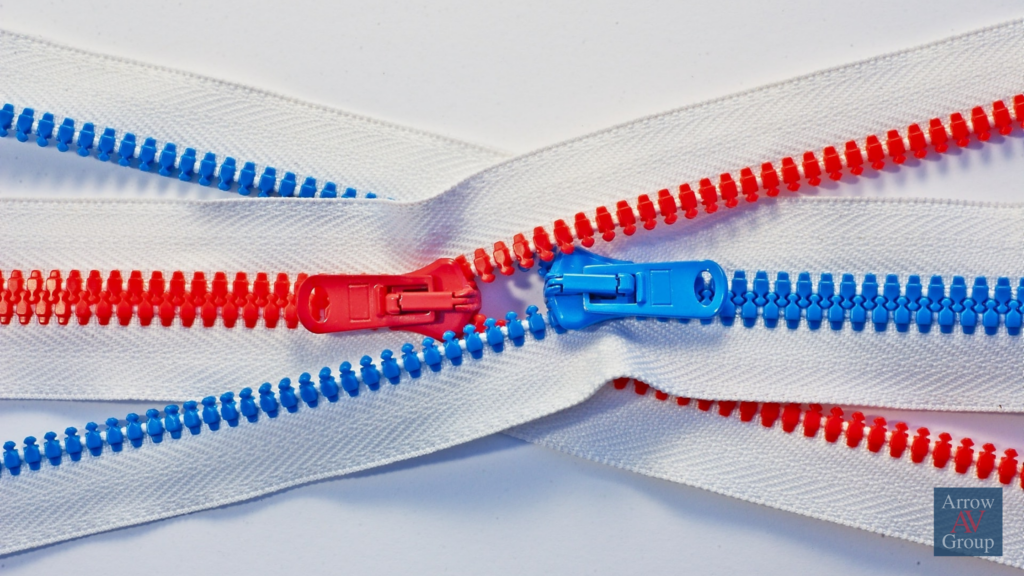

What is chroma subsampling?

Before we move on from video compression, we must visit another method you might come across if you ever want to run AV-over-IP. Chroma subsampling is a compression method based on the fact that humans are more sensitive to differences in brightness (luminance) than to differences in color (chrominance). This method separates a video into three images for compression: the luminance image (Y) and two color images (Cb and Cr). The luminance image is left at full resolution, preserving fine detail. The color images are stored at reduced resolution, losing detail, but when they are recombined with the luminance image, the picture still looks sharp.
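Here is a rough Python sketch of that split. It assumes 8-bit RGB pixels and the full-range BT.601 conversion used by JPEG. The helper names are made up for illustration, and halving the color images in both directions is just one common choice. Real encoders do this with far more care and speed.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to Y, Cb, Cr (full-range BT.601, as in JPEG)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def split_planes(image):
    """Turn rows of (r, g, b) pixels into three separate images: Y, Cb, Cr."""
    converted = [[rgb_to_ycbcr(*pixel) for pixel in row] for row in image]
    y_plane = [[p[0] for p in row] for row in converted]
    cb_plane = [[p[1] for p in row] for row in converted]
    cr_plane = [[p[2] for p in row] for row in converted]
    return y_plane, cb_plane, cr_plane


def shrink_by_two(plane):
    """Average each 2x2 block into one sample (assumes even width and height)."""
    return [
        [
            (plane[r][c] + plane[r][c + 1] + plane[r + 1][c] + plane[r + 1][c + 1]) / 4
            for c in range(0, len(plane[0]), 2)
        ]
        for r in range(0, len(plane), 2)
    ]


def subsample(image):
    """Keep Y at full resolution; store Cb and Cr at half width and half height."""
    y, cb, cr = split_planes(image)
    return y, shrink_by_two(cb), shrink_by_two(cr)


def count_samples(*planes):
    """Total number of stored values across the given planes."""
    return sum(len(row) for plane in planes for row in plane)


# A tiny 4x4 test frame: left half reddish, right half bluish.
frame = [[(200, 30, 30), (200, 30, 30), (30, 30, 200), (30, 30, 200)] for _ in range(4)]
full = count_samples(*split_planes(frame))
reduced = count_samples(*subsample(frame))
```

For this 4x4 frame, the three full-size images hold 48 values in total, while the subsampled version holds 24: the brightness image keeps all 16, and each color image drops from 16 to 4.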

There are three common formats for chroma subsampling.

- 4:4:4 – The highest quality, with no color information discarded

- 4:2:2 – A moderate quality, with the color resolution cut in half horizontally

- 4:2:0 – A lower quality, with the color resolution cut in half both horizontally and vertically
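The three numbers in these labels follow the J:a:b convention: picture a block of pixels J wide and two rows tall, where a is the number of color samples kept in the first row and b is the number of additional color samples kept in the second row. Under that reading, a short Python sketch can work out how much data each format stores compared with 4:4:4. The function name `relative_size` is made up for illustration, and the result ignores everything else a codec does afterward.

```python
from fractions import Fraction


def relative_size(scheme):
    """Stored samples for a J:a:b scheme, as a fraction of full 4:4:4 data."""
    j, a, b = (int(part) for part in scheme.split(":"))
    luma = 2 * j            # brightness: every pixel in the J-wide, 2-row block
    chroma = 2 * (a + b)    # two color images (Cb and Cr), a + b samples each
    full = 3 * 2 * j        # 4:4:4 keeps all three images at full resolution
    return Fraction(luma + chroma, full)


for scheme in ("4:4:4", "4:2:2", "4:2:0"):
    print(scheme, relative_size(scheme))
# prints:
# 4:4:4 1
# 4:2:2 2/3
# 4:2:0 1/2
```

So 4:2:2 stores two thirds of the raw samples and 4:2:0 stores half, before any other compression is applied.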

Bottom Line: Know the system you’re using and choose the compression method that works best for you.

And we’ve done it! We’ve looked at every property of a video from frame to compression and made it out alive. Thanks for sticking with us through some of the more technical parts.

Don’t care about the technical details but still want to get great video to your community? Live streaming? Digital signage? Presentation? Send us an email and we’ll help you design a system that just works!

Want to get these articles a week in advance with extra deals and news? Sign up for our weekly newsletter, the Archer’s Quiver, and we’ll send you a FREE AV guide.

About Arrow AV Group

We are a premiere audiovisual integration firm serving corporate, government, healthcare, house of worship, and education markets with easy-to-use solutions that drive success. Family-owned and operated from Appleton, WI for over 35 years.